CommunityData:Wikia data

XML Dumps

So far, all of our Wikia projects use the XML dumps. These are stored on hyak at /com/raw_data/wikia_dumps.

The 2010 Wikia dumps are the only ones that come close to being either complete or reliable.

The next most useful dumps are from WikiTeam and were obtained from archive.org. As of May 23, 2017, the most recent complete dumps [1] from WikiTeam are from December 2014. Mako found some missing data in these dumps and contacted WikiTeam, who released a patch [2] that we have yet to validate.

Knowing if a dump is valid

The most common problem with dumps is truncation. Sometimes a tag is never closed, and expat-based parsers like python-mediawiki-utils / wikiq will fail. Dumps are commonly truncated right after a revision or page, for reasons we don't know. We assume that an XML dump is an accurate representation of the wiki if it has opening and closing <mediawiki> tags and is otherwise valid XML.

Wikia dumps sometimes have odd quirks (for example, they put SHA1s in unexpected places). We just have to work around these. When building tools for working with MediaWiki data, consider including and surfacing such fields so that the objects your tools produce accurately reflect the underlying data.
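The validity criterion (opening and closing <mediawiki> tags, otherwise well-formed XML) can be checked by streaming the dump through expat without loading it into memory. The following is a minimal Python sketch using only the standard library. It assumes an uncompressed dump file, and the function names are hypothetical (not part of wikiq or python-mediawiki-utils). It only checks well-formedness and the root element name; it does not validate against the MediaWiki export schema or detect the Wikia quirks mentioned above.

```python
import xml.parsers.expat
from typing import Iterable, Iterator


def read_chunks(path: str, chunk_size: int = 1 << 20) -> Iterator[bytes]:
    """Yield the raw bytes of a file in fixed-size chunks (1 MiB by default)."""
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            yield chunk


def is_valid_dump_chunks(chunks: Iterable[bytes]) -> bool:
    """Return True if the chunks form well-formed XML whose root is <mediawiki>.

    Namespace processing is off, so the default-namespace root element of a
    MediaWiki export is reported simply as "mediawiki".
    """
    parser = xml.parsers.expat.ParserCreate()
    root_names: list[str] = []

    def on_start(name, attrs):
        # Only the first start tag (the root element) matters here.
        if not root_names:
            root_names.append(name)

    parser.StartElementHandler = on_start
    try:
        for chunk in chunks:
            parser.Parse(chunk, False)
        # Signal end of input; a truncated file (e.g. no </mediawiki>) raises here.
        parser.Parse(b"", True)
    except xml.parsers.expat.ExpatError:
        return False
    return root_names == ["mediawiki"]


def is_valid_dump(path: str) -> bool:
    """Check an uncompressed XML dump on disk."""
    return is_valid_dump_chunks(read_chunks(path))
```

Usage would look like `is_valid_dump("path/to/dump.xml")`, where the path is a placeholder. For a compressed dump, one option is to read chunks from `gzip.open` or `bz2.open` instead of `open`. Splitting the parsing from the file reading keeps the check testable on in-memory byte strings.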

Obtaining fresh dumps

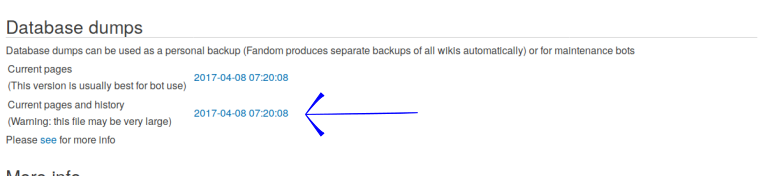

If the WikiTeam data doesn't suit your needs, you will probably need to get a dump yourself. The first thing to try is downloading it directly from Wikia via the Special:Statistics page. Note that you need to be logged in to do this.

1. Visit the Special:Statistics page of the wiki you want to download, e.g., http://althistory.wikia.com/wiki/Special:Statistics

2. Click the link for "current pages and history" (the link text is the dump's timestamp).

If this dump is out of date or doesn't exist, you will have to request a new dump.

Sometimes requesting or downloading the dump fails with an error. In that case, you will have to get a new dump from the API [3].
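Getting a dump from the API usually starts with enumerating every page title. The following is a minimal Python sketch using only the standard library. It assumes the wiki serves the standard MediaWiki API at /api.php (the althistory URL is only an example), and the User-Agent string is hypothetical. It uses list=allpages, which covers only the main namespace (0) unless apnamespace is set, and follows continuation in both the current `continue` format and the older `query-continue` format of earlier MediaWiki versions. It does not fetch any revision history; a full-history dump would still need each page's revisions exported.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Iterator

# Assumption: the wiki serves the standard MediaWiki API at /api.php.
API_URL = "http://althistory.wikia.com/api.php"


def fetch_json(params: dict) -> dict:
    """GET the API with the given query parameters and decode the JSON reply."""
    url = API_URL + "?" + urllib.parse.urlencode(params)
    # Hypothetical User-Agent string; identify your own project here.
    req = urllib.request.Request(url, headers={"User-Agent": "wikia-dump-sketch/0.1"})
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def iter_all_titles(fetch: Callable[[dict], dict] = fetch_json) -> Iterator[str]:
    """Yield every main-namespace page title via list=allpages, following continuation."""
    params = {"action": "query", "list": "allpages", "aplimit": "500",
              "format": "json", "continue": ""}
    while True:
        data = fetch(params)
        for page in data["query"]["allpages"]:
            yield page["title"]
        if "continue" in data:
            # Current continuation format: merge tokens such as apcontinue.
            params = {**params, **data["continue"]}
        elif "query-continue" in data:
            # Older format: tokens are nested under the module name.
            params = {**params, **data["query-continue"]["allpages"]}
        else:
            break
```

Usage would be `for title in iter_all_titles(): print(title)`. The fetch function is a parameter so that the paging logic can be exercised offline with canned responses instead of live requests.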