COVID-19 Digital Observatory

Revision as of 18:57, 31 March 2020

This project page is currently under construction.

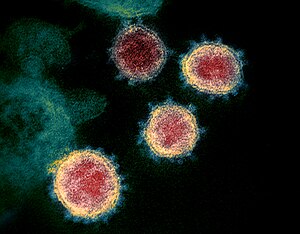

This page documents a digital observatory project that aims to collect, aggregate, distribute, and document public social data from digital communication platforms in relation to the 2019–20 coronavirus pandemic. The project is being coordinated by the Community Data Science Collective and Pushshift.

Overview and objectives

As people struggle to make sense of the COVID-19 pandemic, many of us turn to social media and social computing systems to share information, to understand what's happening, and to find new ways to support one another. We are building a digital observatory to understand where and how people are talking about COVID-19-related topics. The observatory collects, aggregates, and distributes social data related to how people are responding to the ongoing public health crisis of COVID-19. The public datasets and freely licensed tools, techniques, and knowledge created through this project will allow researchers, practitioners, and public health officials to more efficiently gather, analyze, understand, and act to improve these crucial sources of information during crises.

Everything here is a work in progress as we get the project running, create communication channels, and start releasing datasets. Learn how you can stay connected, use our resources as we produce them, and get involved below.

Stay connected

Subscribe to our low-traffic announcement mailing list. You can fill out the form on the list website or email covid19-announce-request@communitydata.science with the word 'help' in the subject or body (don't include the quotes). You will get back a message with instructions.

The email list will contain occasional updates, information about new datasets, partnerships, and so on.

Access Data

(coming soon)

We hope that our initial data release from this project will be a good starting point for investigating social computing and social media content related to COVID-19. At first, we will focus on providing static datasets (in raw text and structured formats like CSV and JSON) from Wikipedia, as well as search engine results pages (SERPs) for a set of searches on COVID-19-relevant terms. We are also releasing a list of keywords generated from Google Trends, with translations into many languages based on Wikidata. We plan to expand on this initial release with new sources of data, including data from Twitter, Reddit, and localized content specific to particular geographic regions. We also plan to build infrastructure to provide rapid and frequent updates of datasets in forms ranging from complete raw data to user-friendly formats.

At the moment, the best way to find the data is at https://covid19.communitydata.science/datasets/. The search_results folder contains compressed raw data generated by Nick Vincent's SERP scraping project (https://github.com/nickmvincent/LinkCoordMin). The wikipedia_views folder has view counts for Wikipedia pages of COVID-19-related articles in .json and .tsv format. The keywords folder has .csv files with COVID-19-related keywords translated into many languages, along with their associated Wikidata item identifiers.

We have a GitHub repository (https://github.com/CommunityDataScienceCollective/COVID-19_Digital_Observatory). If you want to get involved or start using our work, please clone the repository! You'll find example analysis scripts that walk through downloading data directly into a tool like R and producing some minimal analysis to help you get started.
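The repository's examples target tools like R. For readers working in Python, here is a minimal sketch that builds the download URL for one day's SERP archive and streams it to disk. It assumes the daily archives sit directly inside the search_results folder of the datasets site and follow the covid_search_data-[YYYYMMDD].7z naming convention described in the SERP section. That path layout is an assumption, so check the directory listing before relying on it.

```python
import datetime
import urllib.request

# Base URL of the public datasets folder.
DATASETS_URL = "https://covid19.communitydata.science/datasets/"


def serp_archive_url(day: datetime.date) -> str:
    """Return the *assumed* URL of the SERP archive for one day.

    Assumption: archives live directly in search_results/ and are named
    covid_search_data-[YYYYMMDD].7z.
    """
    filename = f"covid_search_data-{day:%Y%m%d}.7z"
    return f"{DATASETS_URL}search_results/{filename}"


def download(url: str, dest: str) -> None:
    """Stream a remote file to disk without holding it all in memory."""
    with urllib.request.urlopen(url) as response, open(dest, "wb") as out:
        while chunk := response.read(1 << 20):  # read 1 MiB at a time
            out.write(chunk)
```

For example, `download(serp_archive_url(datetime.date(2020, 3, 31)), "covid_search_data-20200331.7z")` would fetch the archive for 31 March 2020, if it exists at that path. The .7z file then needs a 7z-capable tool such as 7-Zip to extract.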

Search Engine Results Pages (SERP) Data

The SERP data in our initial data release includes the first search result page from Google and Bing for a variety of COVID-19-related terms gathered from Google Trends and from Google's and Bing's autocomplete "search suggestions." Specifically, starting from a set of six "stem keywords" about COVID-19 and online communities ("coronavirus", "coronavirus reddit", "coronavirus wiki", "covid 19", "covid 19 reddit", and "covid 19 wiki"), we collect related keywords from Google Trends (using open source software[1]) and autocomplete suggestions from Google and Bing (using open source software[2]). In addition to COVID-19 keywords, we also collect SERP data for the top daily trending queries. Currently, the SERP data collection process does not specify a location in its searches. Consequently, the default location is the location of our machine, on Northwestern University's Evanston campus. We are working on collecting SERP data with locations specified beyond the Chicago area (i.e., other "localized" content).
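The keyword expansion step can be sketched in Python. The sketch starts from the six stem keywords, merges in related terms and autocomplete suggestions, drops duplicates, and turns each term into a Google and a Bing search URL. It is not the project's code: the related-term and suggestion dictionaries are hypothetical stand-ins for the output of the open source tools cited above.

```python
from urllib.parse import quote_plus

# The six stem keywords listed on this page.
STEM_KEYWORDS = [
    "coronavirus", "coronavirus reddit", "coronavirus wiki",
    "covid 19", "covid 19 reddit", "covid 19 wiki",
]

# Standard public search URL templates for the two engines.
ENGINES = {
    "google": "https://www.google.com/search?q={}",
    "bing": "https://www.bing.com/search?q={}",
}


def expand_keywords(stems, related, suggestions):
    """Merge stems with related terms and autocomplete suggestions.

    `related` and `suggestions` map a stem to a list of terms (hypothetical
    stand-ins for Google Trends and autocomplete output). First-seen order
    is kept and case-insensitive duplicates are dropped.
    """
    seen, terms = set(), []
    for stem in stems:
        for term in [stem, *related.get(stem, []), *suggestions.get(stem, [])]:
            key = term.strip().lower()
            if key and key not in seen:
                seen.add(key)
                terms.append(term.strip())
    return terms


def search_urls(terms):
    """Yield (engine, term, url) for every term on every engine."""
    for term in terms:
        for engine, template in ENGINES.items():
            yield engine, term, template.format(quote_plus(term))
```

Here is a usage example. `expand_keywords(STEM_KEYWORDS, {"coronavirus": ["coronavirus symptoms"]}, {"covid 19": ["covid 19 update", "Coronavirus"]})` yields eight terms, because "Coronavirus" duplicates a stem. `search_urls` then produces 16 URLs, one per term per engine.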

The SERP data is released as a series of compressed archives (7z), one archive per day, that follow the naming convention covid_search_data-[YYYYMMDD].7z. Within each archive, there is a folder for each device emulated during data collection (currently two: Chrome on Windows and iPhone X), containing all of the SERP data for that device. Within each device subdirectory, the SERP data is organized into folders named after the URL of the search query (e.g., 'https---www.google.com-search?q=Krispy Kreme'), and each SERP folder contains three data files:

- a PNG screenshot of the full first page of results,

- an MHTML "snapshot" of the page (see https://github.com/puppeteer/puppeteer/issues/3658),

- and a JSON file with a variety of metadata (e.g., the date and the device emulated) and a list of every link (<a>) element on the page with its coordinates (top, left, bottom, right) in pixels.
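To work with a single SERP folder, you need to map a query URL to its folder name and then read the link list from the JSON file. This sketch infers the folder naming rule from the one example on this page: each ':' and '/' in the query URL is replaced by '-', and the query itself is left unencoded. The JSON key names (`links`, `href`, `top`, `left`, `bottom`, `right`) and the 800-pixel "fold" are hypothetical placeholders, so inspect a real file and adjust them. The sketch returns the links whose top edge falls within the first screen of the page, in reading order.

```python
import json


def serp_folder_name(search_url: str) -> str:
    """Map a search URL to its folder name.

    Assumption inferred from one example:
    'https://www.google.com/search?q=Krispy Kreme'
    -> 'https---www.google.com-search?q=Krispy Kreme'
    """
    return search_url.replace(":", "-").replace("/", "-")


def links_above_fold(metadata: dict, fold_px: int = 800) -> list:
    """Return hrefs of links whose top edge is above `fold_px` pixels.

    Hypothetical schema: metadata["links"] is a list of dicts with an
    "href" and pixel coordinates "top", "left", "bottom", "right".
    The 800 px default is an arbitrary illustrative screen height.
    """
    links = metadata.get("links", [])
    visible = [link for link in links if link["top"] < fold_px]
    # Sort top-to-bottom, then left-to-right, to approximate reading order.
    visible.sort(key=lambda link: (link["top"], link["left"]))
    return [link["href"] for link in visible]


def load_metadata(path: str) -> dict:
    """Read one SERP folder's JSON metadata file."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Comparing these lists across the two emulated devices is one way to see how much a smaller screen changes which results are visible without scrolling.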

Wikipedia article pages and edit history

Our initial release provides exhaustive edit data for the list of English Wikipedia articles covered by WikiProject Covid-19.

Wikipedia pageviews

Our initial release provides pageview data for the list of English Wikipedia articles covered by WikiProject Covid-19.
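The wikipedia_views folder provides these counts in .tsv (and .json) format. This minimal sketch uses Python's standard library to total views per article, reading the file one row at a time so it stays light on memory. The column names `article`, `date`, and `views` are hypothetical, so check the header of the real file first.

```python
import csv
from collections import Counter
from typing import Iterable


def total_views(rows: Iterable[dict]) -> Counter:
    """Sum daily view counts per article.

    Hypothetical columns: 'article' (page title), 'date', 'views' (integer).
    """
    totals = Counter()
    for row in rows:
        totals[row["article"]] += int(row["views"])
    return totals


def read_tsv(path: str):
    """Yield rows from a tab-separated file one at a time."""
    with open(path, newline="", encoding="utf-8") as f:
        yield from csv.DictReader(f, delimiter="\t")
```

For example, `total_views(read_tsv("path/to/views.tsv")).most_common(10)` lists the ten most-viewed articles. The path is a placeholder for whichever file you download.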

Keywords and search terms

We currently use and provide three different types of keywords and search terms:

- Article names/topics from Wikipedia's WikiProject Covid-19

- Wikidata entities generated via the "Main items" described by Wikidata's WikiProject COVID-19

- Top 25 daily trending search terms from Google and Bing.
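The keywords folder pairs translated keywords with Wikidata item identifiers, so one natural first step is to group every translation under its item. This sketch does that with the standard library. The column names `itemid`, `langcode`, and `label` are hypothetical placeholders for whatever headers the released .csv files actually use.

```python
import csv
import io
from collections import defaultdict


def group_by_item(csv_text: str) -> dict:
    """Group translated keywords by Wikidata item identifier.

    Hypothetical columns: 'itemid', 'langcode', 'label'.
    Returns {itemid: {langcode: [label, ...]}}.
    """
    grouped = defaultdict(lambda: defaultdict(list))
    for row in csv.DictReader(io.StringIO(csv_text)):
        grouped[row["itemid"]][row["langcode"]].append(row["label"])
    # Convert the nested defaultdicts to plain dicts for easier use.
    return {item: dict(langs) for item, langs in grouped.items()}
```

To use it, read a downloaded file (the path here is a placeholder) with `open("path/to/keywords.csv", encoding="utf-8").read()` and pass the text to `group_by_item`. You can then look up every language's label for one item when building multilingual search queries.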

Get Help Using Data

(more coming soon)

As we develop data collection resources and datasets, we will also provide simple example analysis scripts to demonstrate how you might access, import, and analyze small subsets of the data we produce. For instance, take a look at the "analysis" subdirectory of the wikipedia_views section of our GitHub repository.

We plan to develop tutorials and demos for the use of the data we release, and we particularly welcome contributions that help make these resources more easily usable by others. In some cases, the data sources are quite large and might not be suitable for analysis on your personal computer. Wherever possible, we'll try to point you to useful tools to help make this feasible or easier.

Contribute

We are eager for collaborators and committed to working openly.

- Want to contribute to this wiki? Consider creating an account and being bold!

- Want to contribute to our datasets and/or analysis code? Clone our GitHub repository and pitch in with pull requests.

Code of Conduct

We ask that all contributors adhere to the Contributor Covenant.